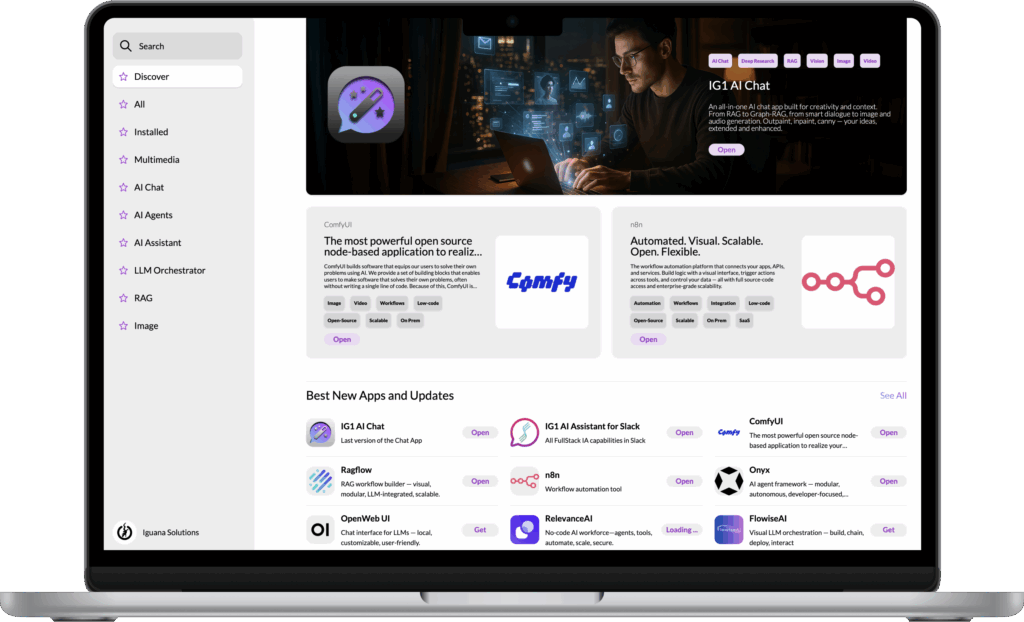

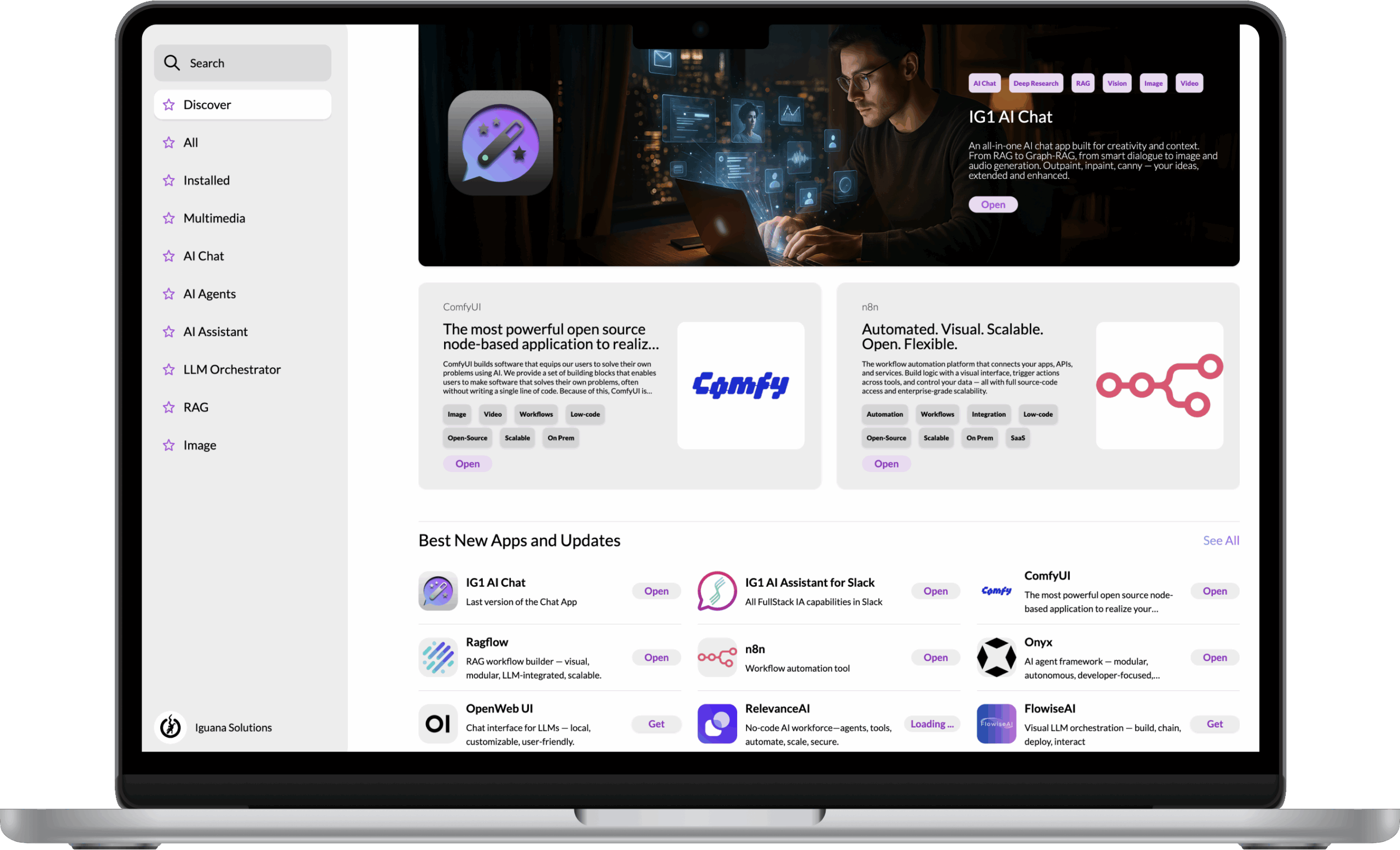

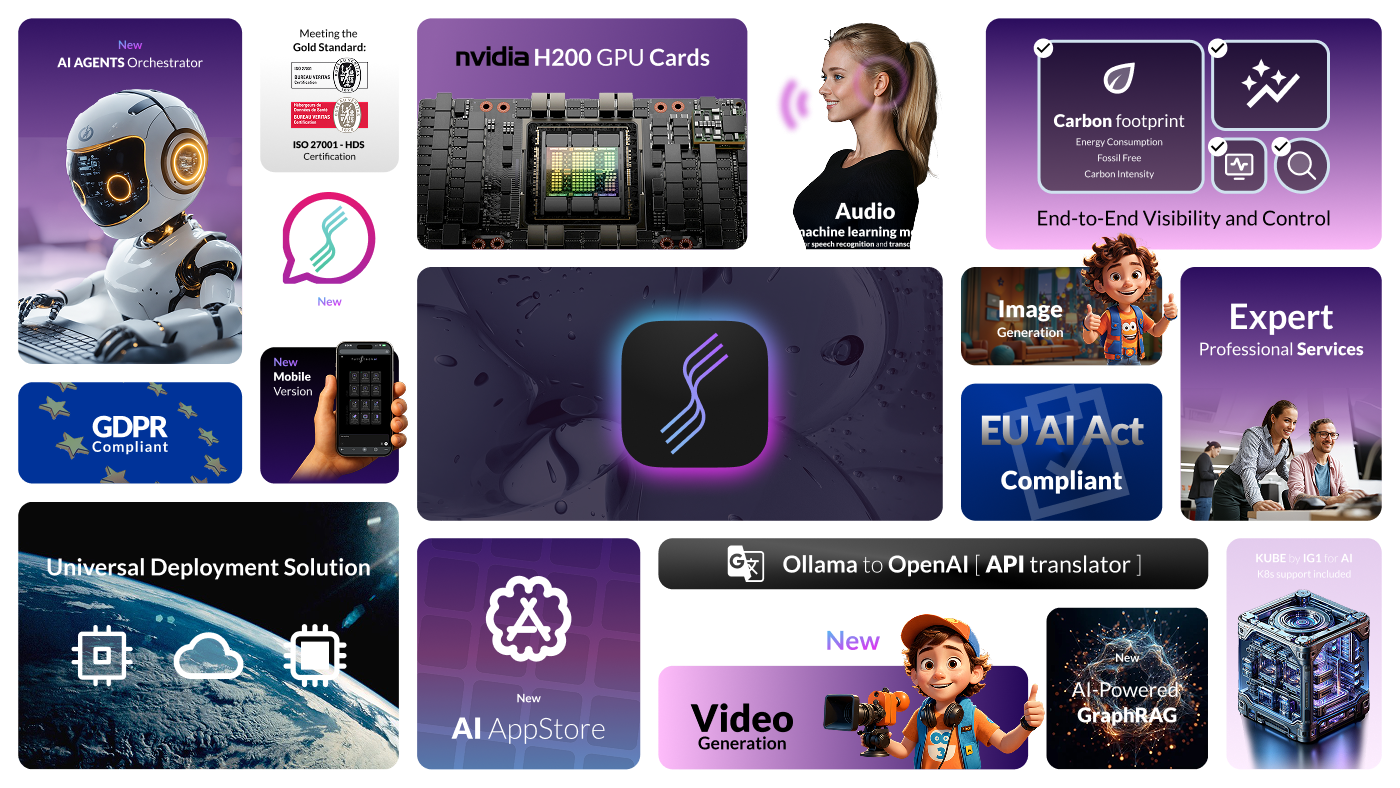

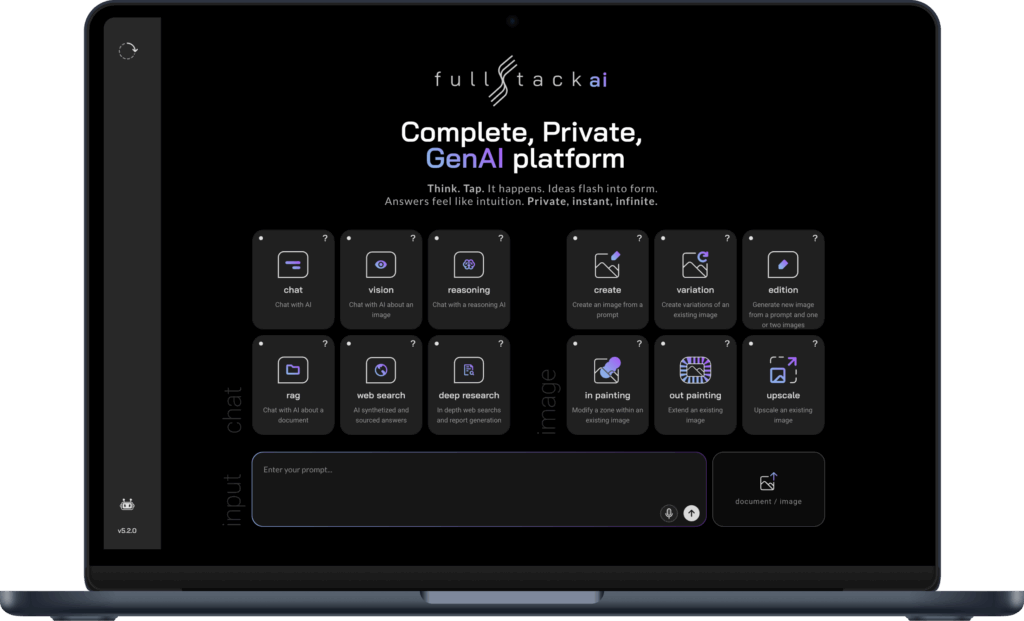

FullStack AI Platform is the most advanced and versatile iteration of our AI infrastructure stack, designed to help teams take ideas to production at scale. With native support for LLMs, RAG pipelines, copilots, and low-code AI applications, it provides what teams need to build, deploy, and run real-world AI solutions across on-premises, hybrid, and cloud environments. FullStack AI also includes reasoning and audio capabilities, making it a strong foundation for organizations that want to harness AI without the added complexity.