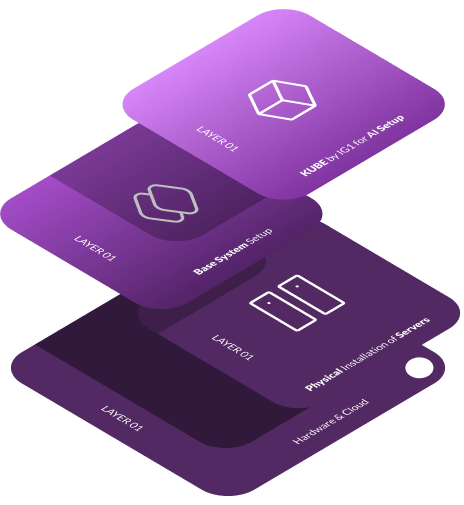

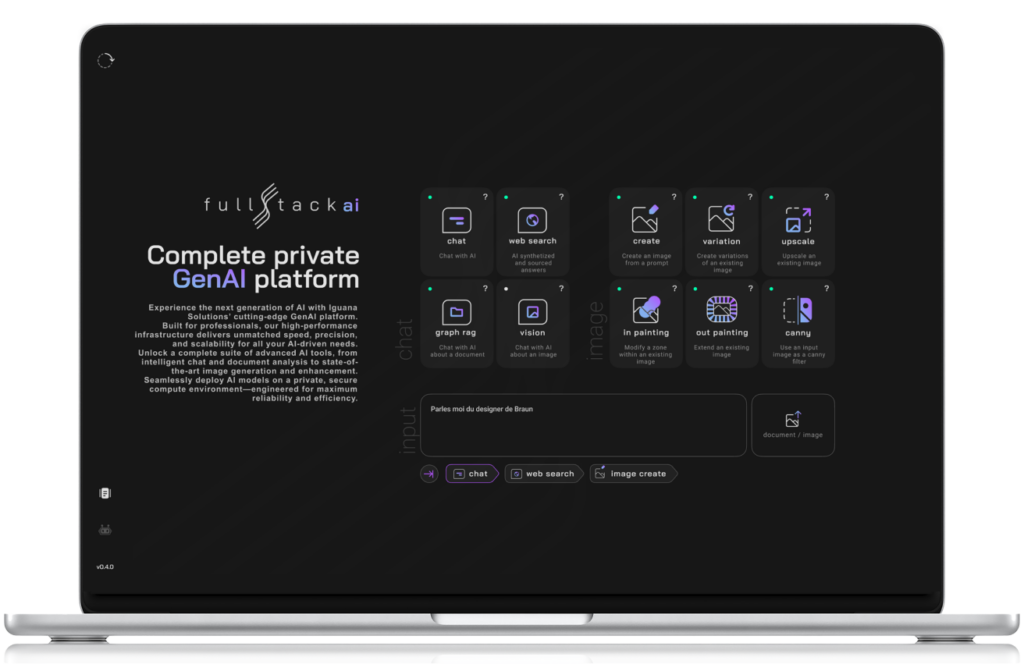

A Complete Solution for AI Infrastructure

Our full-stack AI platform combines hardware and software in a single, integrated stack that is ready for deployment on-premises or in the cloud. From NVIDIA GPUs and cloud-ready hardware to intelligent orchestration tools and advanced AI models, IG1's stack enables efficient, scalable GenAI deployments. We offer flexible configurations tailored to your needs, so you can deploy quickly and start delivering on your AI-driven initiatives right away.