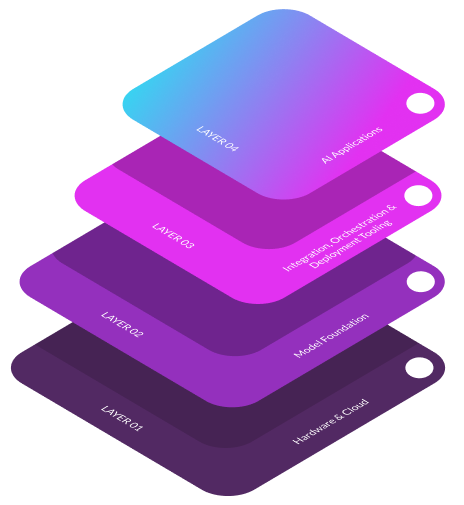

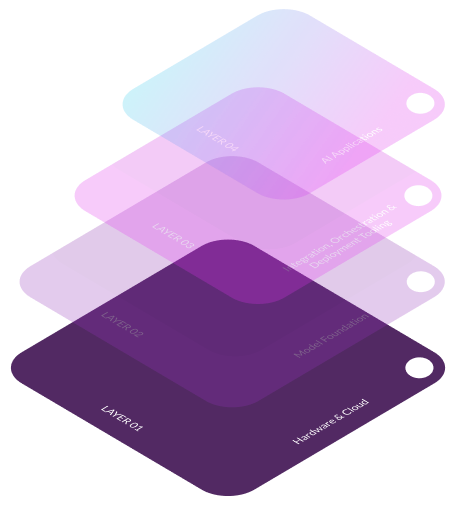

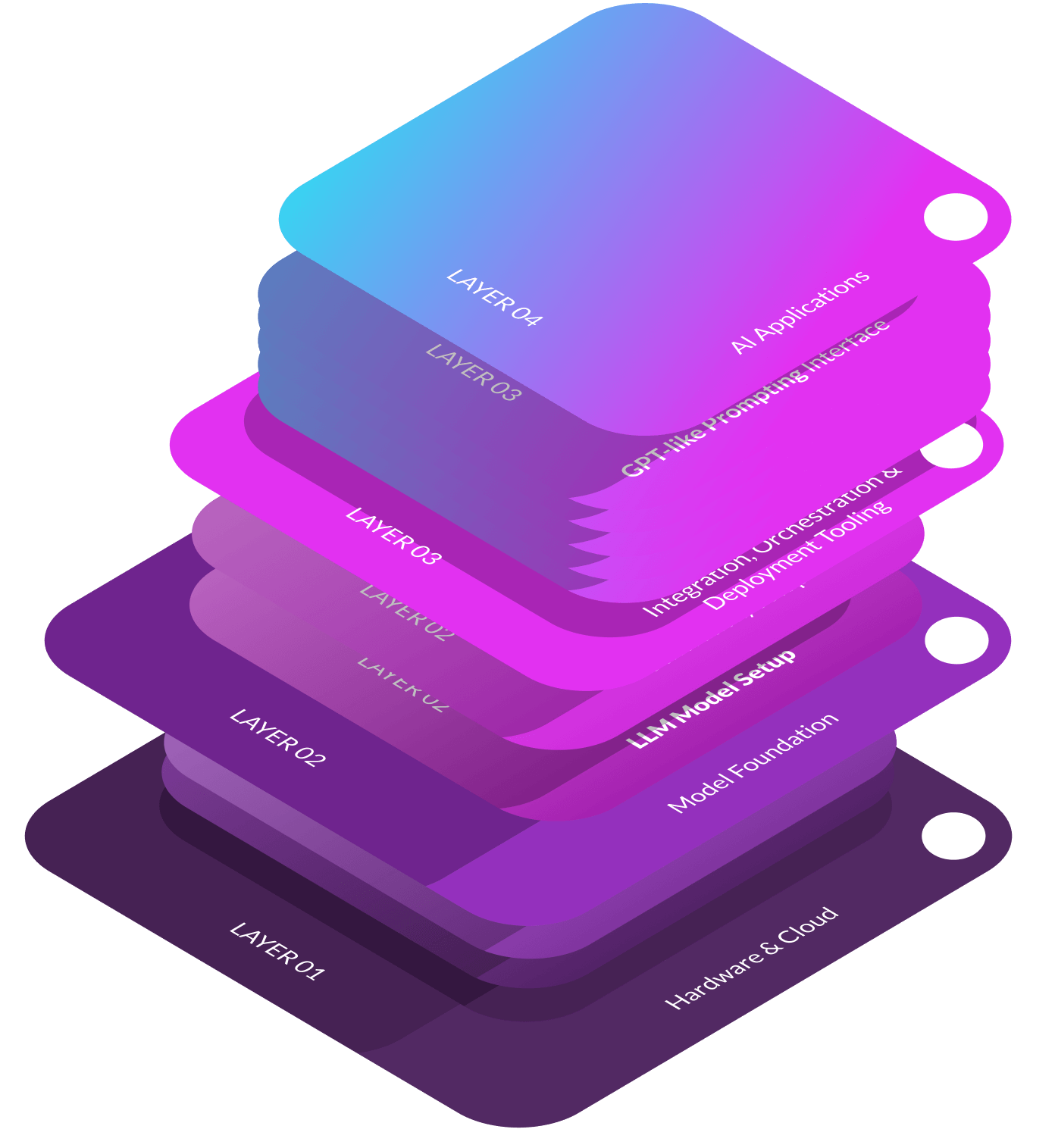

The Generative AI stack is a multi-layered architecture for developing and deploying AI solutions. It comprises four layers: hardware and cloud infrastructure, foundational models, integration tools, and end-user applications. Each layer plays a part in turning raw data into actionable insights and working applications. Understanding the stack helps teams make sound decisions at every level, from sizing computational resources and selecting appropriate models to integrating those models into production environments. This guide breaks down each layer and shows how the layers connect within the AI ecosystem.

Hardware & cloud infrastructure form the foundational layer of the Generative AI stack, providing the necessary computational power and flexibility for training and deploying AI models.

Cloud infrastructure from providers such as AWS, GCP, and Azure offers scalable, flexible services that make AI model training and deployment cost-effective and accessible, although cloud-hosted workloads may run into latency and compliance constraints.

Hardware accelerators, such as the Nvidia H100 GPU and the Cerebras Wafer-Scale Engine, speed up AI model training and inference by providing the high computational power needed to process large datasets efficiently.
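As a rough illustration of why accelerator capacity matters, the sketch below estimates the memory needed just to hold a model's weights at a given numeric precision. The parameter counts and the 80 GB device size are illustrative assumptions, and the estimate deliberately ignores activations, caches, and optimizer state, which add to the real footprint.

```python
# Bytes needed to store one parameter at common numeric precisions.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weight_memory_gb(num_params: int, precision: str = "fp16") -> float:
    """Return the memory needed for the model weights alone, in GB (10**9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


def fits_on_device(num_params: int, device_memory_gb: float, precision: str = "fp16") -> bool:
    """Check whether the weights alone fit in the accelerator's memory."""
    return weight_memory_gb(num_params, precision) <= device_memory_gb


if __name__ == "__main__":
    # Hypothetical 7-billion-parameter model stored in fp16.
    print(weight_memory_gb(7_000_000_000, "fp16"))  # 14.0
    # Hypothetical 70-billion-parameter model on an assumed 80 GB device.
    print(fits_on_device(70_000_000_000, 80.0, "fp16"))  # False
```

A result of `False` suggests the model would need lower precision or several devices, which is one reason scalable infrastructure sits at the base of the stack.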

The Model Foundation layer comprises the core pre-trained models that serve as the building blocks for AI applications. These models, such as GPT-3 and DALL-E (generative models) and BERT (an encoder model used mainly for language understanding), are pre-trained on extensive datasets, capturing complex patterns and knowledge. They provide a starting point for various AI tasks, from natural language processing (NLP) to image generation.

Models are essential because they enable machines to understand, generate, and manipulate human-like text, images, and other data forms. Their role is to generalize from vast amounts of data, making predictions or generating outputs based on new inputs. This foundational layer allows developers to leverage these sophisticated models without starting from scratch, significantly reducing time and resources.

Fine-tuning these pre-trained models on specific datasets adapts them to specialized tasks, improving performance and accuracy. Thus, the Model Foundation layer is indispensable for building efficient, scalable, and high-performing AI solutions.

The Integration, Orchestration & Deployment Tooling layer is vital because it bridges the gap between core models and practical applications. These tools enable developers to integrate generative models into real-world systems, fine-tune them for specific tasks, and manage their deployment at scale. Without this layer, using advanced AI models would be cumbersome and inefficient. It provides essential capabilities like prompt-tuning, workflow automation, and system integration, so that models are not only effective but also fully operational within production environments. This layer turns theoretical AI capabilities into practical, usable solutions.

Tools like Dust, LangChain, and Humanloop enable efficient integration, tuning, and deployment of AI models, streamlining development processes and enhancing model performance in production environments.
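To make the idea of orchestration concrete, here is a minimal sketch of a pipeline that formats a prompt, calls a model, and post-processes the output. It is plain Python and does not use the API of Dust, LangChain, or Humanloop; `make_prompt_step`, `fake_model`, and `run_chain` are hypothetical names, and `fake_model` is a stand-in that only upper-cases its input.

```python
from typing import Callable, List

# A step takes text in and returns text out, so steps can be chained freely.
Step = Callable[[str], str]


def make_prompt_step(template: str) -> Step:
    """Return a step that inserts its input into a prompt template."""
    return lambda text: template.format(input=text)


def fake_model(prompt: str) -> str:
    """Stand-in for a real model call; it only returns the prompt in upper case."""
    return prompt.upper()


def run_chain(steps: List[Step], user_input: str) -> str:
    """Pass the input through each step in order, feeding each output forward."""
    value = user_input
    for step in steps:
        value = step(value)
    return value


# Prompt formatting -> model call -> whitespace clean-up.
chain = [make_prompt_step("Summarize in one sentence: {input}"), fake_model, str.strip]
result = run_chain(chain, "The GenAI stack has four layers.")
print(result)
```

In a real deployment the model step would call a hosted or self-hosted model, and the orchestration tool would add concerns such as retries, logging, and prompt versioning.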

Platform solutions like OpenAI and Cohere provide APIs for seamless integration of advanced AI models into applications, facilitating easy access to powerful NLP and generative capabilities.
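As an illustration of what API-based integration can look like, the sketch below builds (but does not send) an HTTP request for OpenAI's Chat Completions endpoint using only the Python standard library. The API key and model name are placeholders, and `build_chat_request` is a hypothetical helper; check the provider's current API reference before relying on the request shape.

```python
import json
import urllib.request

# Chat Completions endpoint of OpenAI's public API.
API_URL = "https://api.openai.com/v1/chat/completions"


def build_chat_request(api_key: str, model: str, user_message: str) -> urllib.request.Request:
    """Build a POST request carrying a single user message."""
    body = {
        "model": model,  # placeholder; use a model name available to your account
        "messages": [{"role": "user", "content": user_message}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


request = build_chat_request(
    "YOUR_API_KEY", "example-model", "Explain the Generative AI stack in one sentence."
)

# Sending the request needs a valid key and network access, so it is left commented out:
# with urllib.request.urlopen(request) as response:
#     reply = json.load(response)
```

Keeping request construction separate from sending makes the integration easy to inspect and test without spending API credits.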

The Applications layer represents the tangible end-user implementations of generative models, demonstrating their practical value. These applications, such as text, code, image, and video generation tools, use advanced AI to automate tasks, enhance productivity, and drive innovation across various domains. This layer shows how generative models can solve specific problems, streamline workflows, and create new opportunities. Without it, the benefits of advanced AI would remain theoretical, and users would not experience the impact of these technologies in their daily lives.

Standalone applications like Jasper and Copy.AI independently utilize generative models to provide specialized services such as content creation, enhancing productivity and creativity without relying on external platforms.

Bolt-on applications like Notion AI and GitHub Copilot integrate AI capabilities into existing platforms, enhancing their functionality with features like text generation, task automation, and code completion.

This guide explains how we set up Gen AI infrastructure using KUBE by IG1. It starts with installing servers and Nvidia GPUs and setting up the basic software. Then, we configure KUBE by IG1 to manage virtual machines and verify that everything is connected properly. We download and optimize the LLM, integrate it with a system that improves its responses, and set up user-friendly interfaces for interacting with the AI. Finally, we test the system thoroughly, check its performance, and set up monitoring tools to keep it running smoothly. The result is a robust and efficient AI setup.
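One common way to improve a model's responses is retrieval-augmented generation (RAG), where relevant text is looked up and placed in the prompt. The sketch below assumes that approach and is not the KUBE by IG1 implementation: it ranks documents by simple word overlap, whereas production systems typically use embedding-based search. The documents and function names are hypothetical.

```python
import re
from typing import List, Set


def tokenize(text: str) -> Set[str]:
    """Lower-case the text and split it into a set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, documents: List[str], top_k: int = 1) -> List[str]:
    """Rank documents by how many words they share with the question."""
    question_words = tokenize(question)
    ranked = sorted(
        documents,
        key=lambda doc: len(question_words & tokenize(doc)),
        reverse=True,
    )
    return ranked[:top_k]


def build_prompt(question: str, documents: List[str]) -> str:
    """Place the best-matching document ahead of the question."""
    context = "\n".join(retrieve(question, documents))
    return f"Context:\n{context}\n\nQuestion: {question}"


# Hypothetical knowledge-base entries.
docs = [
    "GPU nodes are monitored for temperature and memory usage.",
    "The chat interface sends user questions to the model.",
]
prompt = build_prompt("How is GPU memory monitored?", docs)
print(prompt)
```

The resulting prompt would then be sent to the deployed LLM, which can ground its answer in the retrieved context.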

Presentation of the Plug n Play AI Platform by Iguana Solutions with an overview of the platform and its features: infrastructure, LLM & RAG, orchestrator and supervision, chat, copilot, no-code, API…

Discover the testimonial of EasyBourse's CTO on his use of the platform.

“With our previous partner, our ability to grow had come to a halt. Opting for Iguana Solutions allowed us to multiply our overall performance by at least 4.”

Cyril Janssens

CTO, easybourse
